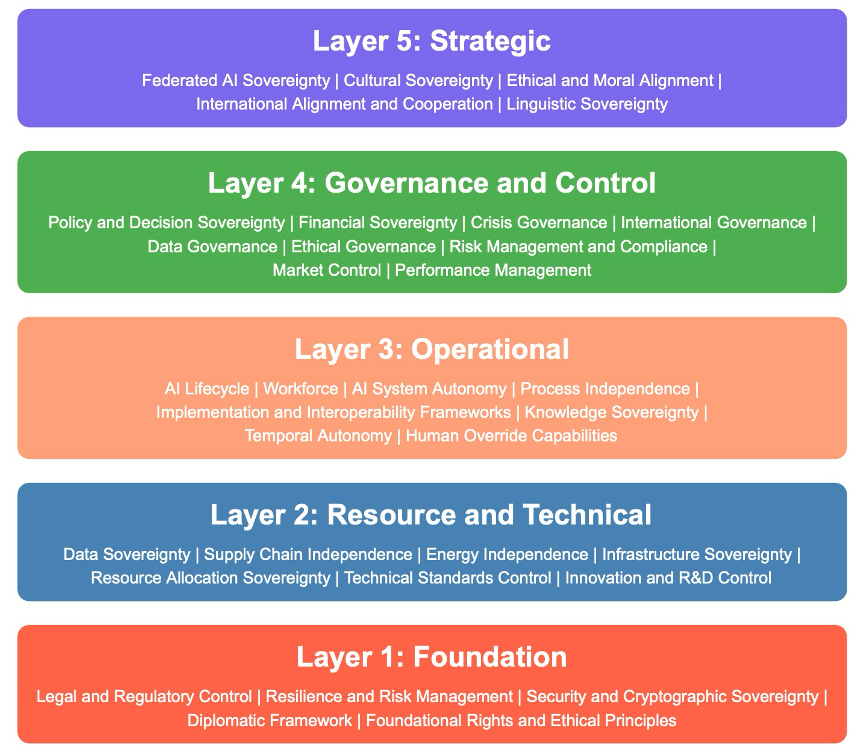

The Sovereignty Imperative: Five Layers of AI Independence

As artificial intelligence grows in economic and political importance, terms like "Sovereign Cloud" and "Sovereign AI" appear frequently in policy discussions, yet they often lack clear, comprehensive definitions. Many existing definitions, particularly those promoted by technology vendors, fall significantly short of what governments need to consider if they are to establish genuine strategic control over AI.

True AI sovereignty extends far beyond data localisation or regulatory compliance. It encompasses a multi-layered framework that enables governments and organisations to maintain strategic autonomy while still participating in the global AI ecosystem. This framework identifies five interconnected layers of AI independence, each building upon the foundational elements of the previous layer.

By understanding these layers, policymakers and organisational leaders can make informed decisions about where sovereignty is essential, where collaboration is beneficial, and how to balance both to serve their strategic interests. This comprehensive approach allows entities to develop AI capabilities that align with their values, protect their interests, and serve their specific needs without unnecessary isolation from global innovation.

Layer 1 - Foundation

Establishing the bedrock of AI sovereignty through essential infrastructure and frameworks:

- Legal and Regulatory Control: Authority to independently create, enforce, and update laws and regulations governing AI within a jurisdiction. This enables the development of AI systems aligned with local needs and protects against external laws or commercial interests overshadowing domestic priorities.

- Resilience and Risk Management: Ability to independently anticipate, withstand, and recover from AI-related disruptions or crises. This minimises downtime and economic losses while preserving continuity of critical services such as national security, healthcare, or transportation systems.

- Security and Cryptographic Sovereignty: Full control over encryption standards, security protocols, and cyber defences related to AI operations. This protects sensitive data and systems from foreign surveillance or unauthorised access and ensures local autonomy in deciding the strength and scope of cryptographic measures.

- Diplomatic Framework: The capacity and institutional structures to engage in international cooperation and negotiation on AI matters without compromising domestic interests. This facilitates constructive partnerships, joint research, and global data-sharing where beneficial while guarding against poorly negotiated deals.

- Foundational Rights and Ethical Principles: Moral and legal guidelines shaping AI's impact on society, such as cultural values, privacy concepts, and justice frameworks. This ensures AI does not conflict with local norms and builds public trust by prioritising fairness, dignity, and consent.

Layer 2 - Resource and Technical

Securing physical and technical independence:

- Data Sovereignty: Control over how, where, and by whom data is collected, stored, processed, and shared. This protects sensitive or strategic data from external exploitation and reinforces privacy and compliance with local data protection laws.

- Supply Chain Independence: Capacity to source and produce critical AI components—hardware, software, and talent—within local or trusted networks. This reduces vulnerabilities tied to foreign suppliers who might withhold technology under political or commercial pressure.

- Energy Independence: Assurance that AI systems can be powered reliably by local or sufficiently diversified energy sources. This is crucial as AI computations are energy-intensive, and stable power is vital for real-time processing and operations.

- Infrastructure Sovereignty: Ownership and control over the physical (data centres, cables) and virtual (cloud, networks) infrastructure powering AI. This ensures high reliability and security within local borders and avoids external control points.

- Resource Allocation Sovereignty: Freedom to decide how funding, compute power, and materials are distributed among various AI projects. This enables prioritisation of national or organisational interests and guarantees critical initiatives aren't sidelined.

- Technical Standards Control: Authority to set or choose the protocols and frameworks governing AI system interfaces, data formats, and safety requirements. This encourages interoperability aligned with local needs rather than foreign-led mandates.

- Innovation and R&D Control: Ability to shape and direct research agendas and development efforts to serve local strategic priorities. This focuses scientific and technological progress on challenges most relevant to local industry, defence, or social initiatives.

Layer 3 - Operational

Managing day-to-day AI operations:

- AI Lifecycle Control: Oversight of each stage of an AI system's life: design, development, deployment, operation, maintenance, and eventual retirement. This prevents external parties from introducing hidden dependencies or controlling crucial updates.

- Workforce Sovereignty: Building and maintaining a skilled local AI workforce capable of working across the value chain. This strengthens domestic capabilities, reduces dependency on foreign experts, and cultivates talent that understands local contexts.

- AI System Autonomy: Determining how much of an AI system's decision-making is automated versus guided or overseen by humans. This limits automation where it could cause unintended consequences and keeps critical decisions subject to human judgement.

- Process Independence: Freedom to design AI workflows, development pipelines, and governance structures internally. This avoids external mandates that might conflict with local organisational culture and facilitates faster adaptation to local needs.

- Implementation and Interoperability Frameworks: Clear rules for how AI systems integrate, communicate, and scale within an organisation or ecosystem. This prevents fragmentation and lock-in scenarios where certain solutions cannot communicate with others.

- Knowledge Sovereignty: Preserving local expertise, research findings, and intellectual property within borders or trusted networks. This mitigates "brain drain" and protects against the loss of critical know-how to foreign competitors.

- Temporal Autonomy: Ability to decide the timing and pace of AI deployments, upgrades, and decommissioning. This avoids premature rollouts or forced adoption schedules dictated by outside factors.

- Human Override Capabilities: Safety valves or manual controls allowing people to intervene if AI behaves unexpectedly or dangerously. This maintains a check on potential AI malfunctions, bias, or unethical decisions.

Layer 4 - Governance and Control

Directing strategic AI development:

- Policy and Decision Sovereignty: Autonomy in setting AI strategies, regulations, and overarching goals at the policy level. This aligns AI initiatives with national priorities and avoids external influences that might skew decision-making away from local values.

- Financial Sovereignty: Control over how AI-related funding, investments, and capital flows are managed locally. This ensures critical projects can be adequately financed without foreign strings attached.

- Crisis Governance Frameworks: Formal procedures to handle AI emergencies or large-scale system failures. This ensures swift, coordinated responses, minimising damage and restoring normalcy quickly.

- International Governance Frameworks: Structured rules for cooperation with other nations and global entities on AI matters. This enables beneficial partnerships and shared innovations in a clear, legally supported manner.

- Data Governance Frameworks: Policies governing data lifecycle activities—collection, usage, sharing, retention—under a consistent set of rules. This protects individual rights and upholds public trust in data handling.

- Ethical Governance Frameworks: Practical guidelines for ensuring moral and cultural principles are integrated into AI design and deployment. This encourages accountability, fairness, and respect for societal norms throughout AI's lifecycle.

- Risk Management and Compliance: Ongoing processes to identify, assess, and mitigate legal, ethical, and operational risks in AI. This reduces liability, financial losses, and reputational damage stemming from AI missteps.

- Market Control: Mechanisms to foster fair competition, regulate monopolies, and set guidelines for private-sector AI offerings. This prevents market capture by large foreign or domestic players that may drive innovation in harmful directions.

- Performance Management: Measuring and evaluating AI systems to ensure they meet predefined targets for accuracy, speed, and fairness. This identifies areas needing improvement and highlights whether AI investments deliver intended benefits.

Layer 5 - Strategic

Enabling international engagement while preserving autonomy:

- Federated AI Sovereignty: Collaborative AI initiatives where each party retains ownership over key components or insights. This facilitates knowledge-sharing and resource pooling without relinquishing critical control over sensitive technology or data.

- Cultural Sovereignty: Ensuring AI systems respect and incorporate local customs, languages, and cultural expressions. This preserves national identity and social fabric, preventing cultural erosion and enhancing AI's acceptance across diverse groups.

- Ethical and Moral Alignment: Embedding deeply held ethical standards—beyond baseline legal requirements—into AI designs and decisions. This helps ensure AI operates within the moral compass of the society it serves and avoids conflicts with local norms.

- International Alignment and Cooperation: Engaging with global AI communities for mutual benefit under carefully negotiated terms. This provides access to cutting-edge developments, talent, and shared learning while preventing isolation that can stunt innovation.

- Linguistic Sovereignty: Commitment to develop and support AI technologies in local languages and dialects. This ensures equitable access to AI-powered tools, especially in multilingual societies, and preserves linguistic heritage.

The framework acknowledges that absolute sovereignty may not be necessary or achievable in every domain. Instead, it helps governments and organisations make strategic decisions about where and how to establish sovereign control over their AI capabilities, allowing for flexible implementation based on specific needs and contexts.
